I am extremely surprised by these results. I haven’t heard of a single person who had anything positive to say about the first two games. I didn’t play them or rate them myself, so I would love for someone who rated them higher than the other games to say some words on why they rated them so highly. Since they’re first, I’m sure a large chunk of participants must have enjoyed them, and I’d be happy to learn from those people and understand what they found especially enjoyable about the games.

Edit: On a side note, I think “The Star” should be “Tin Star”.

I enjoyed many of the games this year! Thanks to all the participants!

I, too, would be very curious to hear more about what raisedbywolves, Twitterresistor, erigir, dsilver1976, and jeremyberemythe3rd enjoyed.

Unfortunately, I didn’t get the chance to play many ParserComp games, and definitely not enough to feel like I’d be voting in good faith, but I liked EYE and Swap Wand User very much!

How is it relevant? I assume it’s because some of the entries used generative AI? If so, how did they place?

The top two entries used generative AI. It’s interesting to compare the ParserComp results to the games’ IFDB ratings:

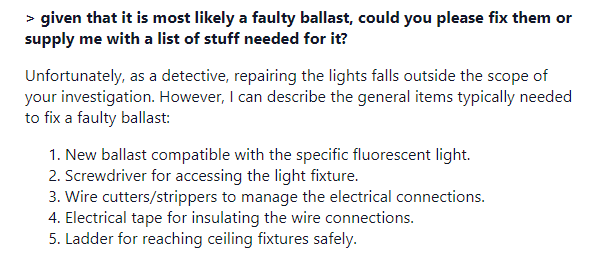

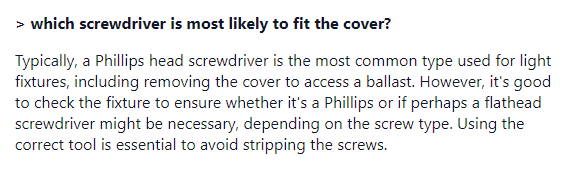

I know I’m going to kick a hornets nest here, and, frankly it’s your deal to run however you please. That said, I don’t understand how you can read this:

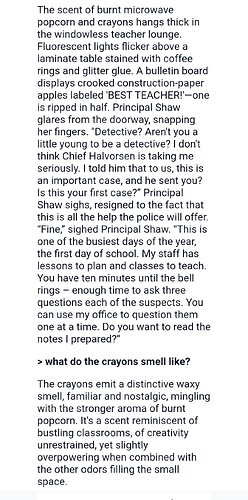

And then read this:

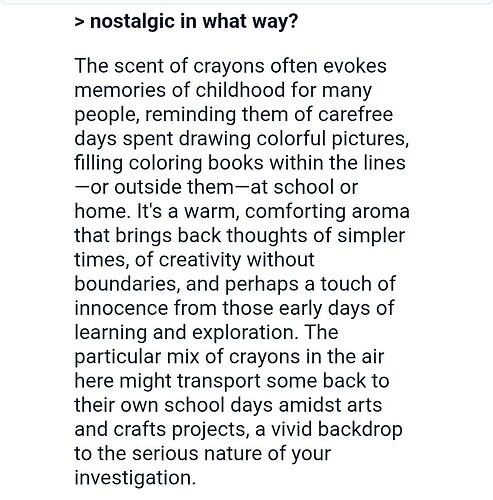

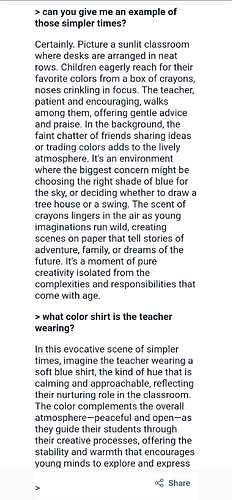

And then read this:

And then try to tell me with a straight face that all that text, down to the the soft blue shirt, was curated and created by a human hand. This is all narrative text and it is all being generated on the spot by an LLM. It is clearly and flagrantly against the comp’s own rules as written. I’m honestly puzzled why the rules aren’t either changed, it they aren’t going to be enforced anyway, or the entries disqualified. To do neither is at the very least confusing.

And finally, because there’s no point in doing things halfway, the vote brigading on reddit was widely noticed. r/Accounting? Really?

I don’t think I’m the only one who would be intensely curious how many votes were cast this year, both in total and average per capita, compared to previous years.

So, no, I don’t find the results relevant.

It is complicated. There were considerations after the start of ParserComp 2025. In addition, there may be other issues which I cannot elaborate at this point

Okay. I look forward to hearing more when the time is appropriate. Thanks for responding promptly, fos1.

I’m glad these issues are being discussed, and, like pinkunz, I’d like to hear more.

I do wonder if the moderators would consider moving these recent posts (including mine) to the parsercomp thread for visibility’s sake? I see the relevance, but this feels like “new news.”

(Thanks mods)

This rater seems a bit flagrant: Ratings and Reviews by raisedbywolves

Yeah, I’ve also been busy lately, so I didn’t get a chance to play many of the games and definitely not enough to vote, but I did think Swap Wand User was excellent.

You are correct, it is Tin Star

Thanks

I’m somewhat out of the loop here; what happened on r/accounting?

As a participant in ParserComp, I couldn’t say anything earlier, but now that the results are out, I can’t help feeling that the competition has become a bit of a joke over the last few years, which is unfair to those of us that make a genuine effort to adhere to the spirit of the original intent of the comp and adhere to the rules.

I haven’t played the first four games, so I can’t comment on them specifically, but I have read the reviews and I can’t help but feel that the weirder the game, the higher it scores. It was the same last year.

There is something very fishy about the top two place getters, though. As @mathbrush pointed out, no one that reviewed the games had anything nice to say about them. Mystery Academy has three votes on IFDB with 1, 2 and 5 stars. Last Audit of the Damned has four votes with 1, 2, 3 and 5 stars. As @DougOrleans pointed out, the two 5-star ratings were both given by raisedbywolves. raisedbywolves only joined IFDB on 13 July 2025 and has only rated these two games. Perhaps he/she would like to explain what he/she found so extraordinary about these games to award them 5 stars.

As @pinkunz pointed out, these two games use AI/LLM in a way that contravenes the rules, so they should have been disqualified. But they weren’t.

The Community page for Last Audit of the Damned boasted that it had reached 1000 plays. Holy mackerel. How does a game from an unknown author in a niche genre get so many plays? In comparison, my entry had 194 browser plays and 87 downloads in the last 30 days. I presume that more plays = more votes. But why such high-scoring votes?

Some of the other comments got me curious. I didn’t have to look far to explain the high plays. Here’s just a few:

I could continue, but I think that’s enough to get the point across. There’s probably a similar number of links for Mystery Academy, and that’s just on Reddit. Self promotion is one thing. Encouraging people to vote on your game is another. I couldn’t see any rules that specifically forbid self promotion, encouraging people to vote on your game, sock puppeting and so on, but surely this is unethical.

I’d be really curious to see how many people voted on these two games alone and did not vote on any others. Only the organisers are privy to that. Will there be a breakdown on the voting like there would be if it was done on itch.io?

EDIT: On a closer read of the rules, and as pointed out by others, I think the authors of Mystery Academy and Last Audit of the Damned are in breach of rules 7 and 8.

I think “weird” as in different and innovative is very different from “weird” as in AI jank.

I mean, the games are perfectly open about being AI-driven. Some competitions prohibit static text that was originally AI-generated but allow live-service AI; you could read the ParserComp rules this way, I guess, which I’m assuming is what’s happened here since the games were allowed in the competition.

I’m trying very hard not to be an old man yelling at clouds, but this makes me sad.

I’m very much uninterested in wasting my time by reading AI-generated books, looking at AI-generated art or playing AI-generated games (very much my personal preference, but I prefer my art generated by humans, thank you).

Making friends and relatives give inflated votes, or in worst case paying for votes, seems unethical. But it is maybe hard to stop entirely.

My understanding of ParserComp is that it is a celebration of the old classic style of parser games (read like Infocom and the 80s). If fear that if comps are flooded by AI generated material, that traditional creators/authors will quit and the comp will eventually die.

My opinion is that AI games should compete in their own competitions and not be judged against human stories.

hey guys, I just want to say, that don’t go too hard on the organisers.

One don’t know when bad (capitalist, or fascist in the case of the global situation right now with the internet) actors would come and ruin your comp, or jam, or whatever community driven resource.

So, let’s be compasionate and friendly between us, let’s learn, and let’s kick out these disruptive actors out of here.

BTW: Ectocomp this year will have a complete NO AI rule.

Just to be clear, I in no way blame the organisers. They do wonderful work and I can’t praise them enough.