Good Morning!

What a fun way to start the day. By way of introduction, I’m Michael M. I wrote all three of the thoughtauction games, and along with my partner, Chris, the genius tech lead, helped design the platform, Taleweaver++.

Let me make a few comments, in sections.

Why We Created Taleweaver++

Both Chris and I loved these games as kids (my favorite was Hamurabi, on the TRS-80), and Chris, growing up in rural Nevada with little to no TV reception, played all sorts of text adventure games on his Commodore 64.

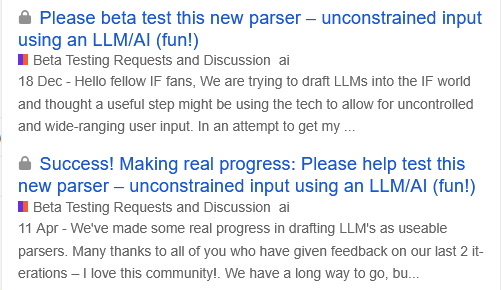

We originally set out to find a way to get our kids to appreciate the IF we loved growing up (granted, this was the Infocom era). But the kids just grew frustrated with the constraints of the parser. We tested old-style IF games in a high school CS class – and achieved a near-perfect abandonment rate: no one lasted more than 5 minutes, and most lasted a lot less. So we decided to try our hand at one that would accept conversational input. This is our 7th version of the product, and the fourth round of publicly released games.

But at the end of the day, we have only one goal: create games that are fun to play and stay in the spirit of the original text adventures that kept us glued to our TRS-80s. So we appreciate what everyone is doing to keep this art form alive, and maybe even understand the hostility, but let us address a few issues.

Addressing the insults in this thread

I am upset that folks on this thread have chosen to insult us.

- We have tried to disclose as much as possible, every step of the way. And I have been very proactive in answering threads from fellow game developers and authors. When we approached the organizers of ParserComp, we not only explained how we worked, we showed it. We initially entered a game called Countdown City, but withdrew it on May 29th because we didn’t want to wait a month to start getting feedback on the platform. We released the game on Itch the next day, as well as announced it here, and got tons of feedback on Reddit and a very spirited discussion in this very forum. We certainly weren’t looking to misrepresent a thing.

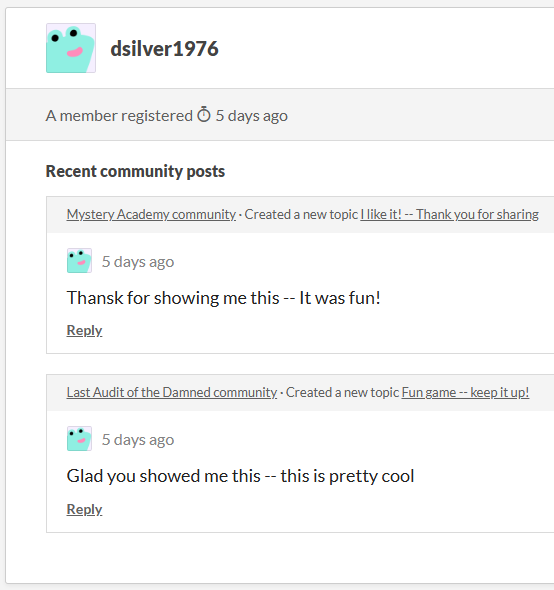

- I have been accused of cheating because *checks notes* I wanted people to play my game, took action to get people to play my game, and was successful in bringing people who weren’t already invested in IF to at least take a look. I thought that was the whole point of what we are doing. As far as being on the accounting thread on Reddit... hell yes! I told a story about a castaway accountant who needed clues within a pirate’s ledger to get himself out of his predicament. Fun! Not enough stories about accountants, in my opinion. I am stunned that anyone would think this a particularly novel idea. In what world would connecting with players be considered wrong? Bizarre. If you wanted this competition, or IF game play in general, to be limited to only card-carrying members of this forum, that could have been easily achieved by requiring a valid, pre-registered username. That seems silly. The only response to us bringing in a couple of thousand new players to the IF community (without writing porn) should be “Thank you”.

Last Audit and Mystery Academy results

Some folks are speculating on our gameplays. Here are the numbers:

Total gameplays: 1,754 (around 1,200 of those for the Last Audit). 7k page hits, so a 20% or so looky-loo to player conversion rate. Only a tiny number of our gameplays came from this chat board/url/associated urls. The majority initially (a month ago) came from Reddit and Discord, and for the last month, a lot came from email threads that, we’re pretty sure, have been passed around among the indie gamedev community that is still picking up steam.

Some on this thread thought that 1,700 gameplays is gangbusters or something. Which is sad. I don’t feel that way. I am embarrassed we had so few. The average Roblox game (by which I mean the 10 millionth most popular blocky amateur effort) gets 10k plays alone.

HOWEVER

Underneath the abysmal player numbers, there were 3 encouraging pieces of data:

– Initial engagement was through the roof. When we tested trad IF, both with and without an LLM parser, initial new player abandonment was north of 99%. We tested on all sorts of players, but unless they had grown up playing IF, new players were almost 100% unwilling to learn the ins and outs of a decades-old parser. With these games, we had a LOT of initial engagement and pretty good continued gameplay before abandonment.

– There is a long tail to this type of game. I haven’t done the numbers yet, but an initial inspection shows a decidedly determined group of players, measuring in the single digits to be sure, who poured hours into these two games. So, from our perspective, there IS a new audience for this genre if we can figure out the right mechanics. Maybe we are wrong about that, but we won’t apologize for trying.

– My G-d, people are creative. We are lucky in that, being a server-side game, we get to aggregate player moves to help make the game better. I am heartened, nay, positively tickled by the amazing responses to puzzle challenges, especially the “tell your own riddle” challenge, the “interrogate a suspect” challenge, and the “deal with the exploding kittens” puzzle. Folks spend a lot of time finding, well, quite frankly, unexpectedly creative solutions to all of these, including ones that weren’t in our original plans for the game! I think we’ve taken a step closer to learning what new games can be played on this platform, and one new player dynamic: creative problem solving.

On the moral tone being struck on the use of AI

I promised myself not to engage on this. But, sigh, here it goes:

An example: I never play Twine games. I find them so stultifyingly boring and limiting that I don’t think I’ve made it to the end of one yet. But it has never occurred to me to take a moral stance on it. I just figure “there are players for that”. Just like there are players for jumbles, wordles, RPGs, etc.

LLMs will find a place within Interactive Fiction. I don’t know how, but they will, and we are experimenting to find the paths where that is most impactful. We have already proven that people are willing to engage and start playing in a way they are not willing to do with old-style IF. There are players for IF with LLMs.

If I cannot appeal to your better angels, let me appeal to your common sense: encouraging the flowering of a new type of IF means, ultimately, you will have more players for your type of IF. Expanding the universe of players takes a village, and we all benefit.

Taleweaver++’s Next Move

In the next couple of days, we will be announcing the beta availability of Taleweaver++ and taking applications for creators/writers who want to try their hand at it.

As the code is a tad shabby-looking, we’re looking for some innovative types who would like to experiment, and aren’t too picky about their user interface to begin with.

Some of the features of this platform:

No-code/low-code: Spend your time as a writer, not a coder (though you’ll also spend some time as director, stunt coordinator, dialogue coach, prop mistress, and set designer).

New types of puzzle spaces and creative directions to explore.

Instant gratification for the non-IF-initiated. The game is playable from the first minute.

Variable A/B testing.

Tons of available players who are looking for new experiences and puzzles (your mileage may vary)

Some of the drawbacks of this platform:

It can be hard to control the output.

Not all the guardrails are there for perfect behavior.

The game experience is still slow because LLMs are still comparatively slow. This is the single most common complaint about our games, and it is a valid one. (Chris finds this funny, since playing Zork on a C64 with a 1541 disk drive regularly took 90 seconds per move. But the world moves on, and instant-response IF is the standard now!)

You must be able to deal with the fact that AI in general is polarizing, and therefore you’ll never be able to please 100% of the people. And that’s OK.