Or if your tastes run differently:

Nice. But right now, I’m interested in gritty and grainy.

How do you give Midjourney an image for inspiration? I’d like to try this.

My feeling is that generated people, e.g. for a game, will be a tough call for AI (at the moment). Background scenes might be the best at this stage.

I’ve been keeping an eye on generated humans. I downloaded their demo, but it seemed to contain a giant bunch of sample people, and I didn’t get to actually generate a person myself. So maybe this isn’t ready yet either.

All you have to do is include the URL to the image in your prompt. You can use multiple images.

I’m actually using some in my WIP. I find them easier than background images, which never match my vision for the scene.

That’s really interesting. Perhaps it’s better than I thought. Can you get it to generate different facial expressions and so forth? Any examples?

Thinking back on it much later, it doesn’t have that crazy Douglas Adams (also cartoonist) style that I feel it should have.

It’s not great at it, but it can do it a little bit. More extreme expressions (e.g., laughing) tend to look unnatural.

Thanks. Encouraging pictures. The irises in the top one are a bit wrong, but fixable. The JPEG artefacts are a bit high. Are these the originals?

Yeah, those are the originals, no touch-ups.

Perhaps you’ve already taken this into account, but I’ve seen “jpg artifacts” used as a negative prompt in some places, if that helps (although I can’t make out the artifacts @jkj_yuio mentioned myself).

I find this site very useful: you can see images generated by AI and, more usefully, the prompts used to create them.

By the way, @rileypb, do you know if this is also possible in some way with Stable Diffusion?

Is what possible, specifically?

Sorry, I meant generating different images of the same model.

I’m pretty sure not, since it doesn’t seem to have the capability to use image prompts? But I’m not 100% certain.

Even with Midjourney the ability to create multiple images of the same character is pretty limited. The facial features drift a lot when you change backgrounds, pose, expression, etc.

Not exactly cover art, but I got this (I guess a playing card?) when prompting Dream Wombo with:

You are standing on the ramp leading down from the legendary starship heart of gold to the Blighted Ground of the legendary planet Magrathea.

For anyone following weird use cases for AI, you’ll probably enjoy Nothing, Forever. It’s a perpetual Twitch stream broadcasting a procedurally generated version of Seinfeld. Apparently the creators combined animation, writing, and audio machine learning systems to generate every part of the show. It becomes apparent very quickly that the human touch is missing, but the live Twitch chat reacting to each “joke” makes for a fun juxtaposition.

Glad I found this thread. Despite the ethical concerns surrounding AI, I enjoy using it occasionally for inspiration or fun. Also, from what I’ve read, Canva and Icons8 train their AI models on their own images.

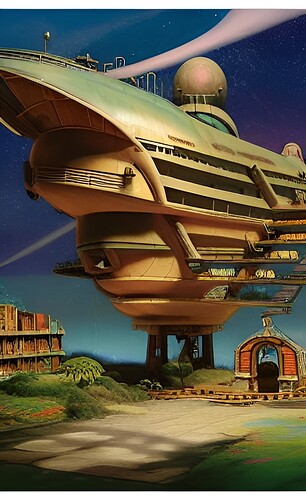

Anyway, I’m sharing my findings with you:

- Copilot: for me, the most aesthetically pleasing one.

- Icons8: an awesome generator for 3D clay models, but unfortunately it’s not free.

- Canva: not the greatest, but good for inspiration.

Another possibility is OpenArt (not free but allows trial accounts). Here’s an example of retropunk noir:

(Prompts can be very detailed. The one I used to generate this image was: “Photorealistic portrayal of a hard-boiled 1930s private detective in a trench coat and fedora hat, cyberpunk urban setting, dramatic neon lighting, high contrast shadows, noir atmosphere, rain-soaked streets, futuristic skyscrapers with holographic ads, glowing cityscape in the background, vibrant blues and purples, somber and gritty mood, steam rising from manholes, ultra-detailed, highly realistic textures, cinematic composition, immersive and stunning visuals”.)

Those pictures are really good, especially the last one.

A lot has already been said about AI, so I’m going to focus on something else: AI imaging’s suitability for IF.

Firstly, I like to put graphics in IF. Not everyone does. But in my mission to improve IF visuals, I’m still not seeing AI deliver enough. I’d agree that if you try for long enough with prompts, you might get enough pictures to, say, make a chapter-by-chapter meta image set.

But the problem is consistency. With prompts alone, I can’t see how you can make AI generate the same person in different situations as you’d need for a game. Almost every game, in fact.

As an aside, if you pick a known celebrity or famous actor, this can work because AI knows that person. But for a unique and randomly generated person, I’m not seeing this working at all right now.

And it gets worse:

If your game’s graphics are built with layers, kind of VN (visual novel) style, you’re going to want backgrounds and foregrounds to composite together. AI currently fails completely at making people or objects with transparent backgrounds. You can ask it for a blank background, but that’s not the same thing: you’re going to have to cut that person out manually. And no, I haven’t found any AI cut-out tools that are accurate enough. There are lots on the internet, but they don’t work well enough for real use.
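To illustrate why a “blank background” is not the same as transparency, here is a minimal sketch of the crudest automatic cut-out: a colour key that makes pixels close to the background colour transparent. It assumes the image is a plain nested list of RGB tuples and that the background is one flat colour; the function name and the `tolerance`/`feather` values are hypothetical choices for illustration, not how any particular cut-out tool works.

```python
import math
from typing import List, Tuple

RGB = Tuple[int, int, int]


def colour_key_alpha(image: List[List[RGB]], key: RGB,
                     tolerance: float = 30.0,
                     feather: float = 20.0) -> List[List[int]]:
    """Build a 0-255 alpha mask from each pixel's distance to the key colour.

    Hypothetical helper: pixels within `tolerance` of `key` become fully
    transparent (0), pixels farther than `tolerance + feather` stay fully
    opaque (255), and pixels in between get a linear ramp for a softer edge.
    """
    mask = []
    for row in image:
        mask_row = []
        for pixel in row:
            dist = math.dist(pixel, key)  # Euclidean distance in RGB space
            if dist <= tolerance:
                alpha = 0
            elif dist >= tolerance + feather:
                alpha = 255
            else:
                alpha = round(255 * (dist - tolerance) / feather)
            mask_row.append(alpha)
        mask.append(mask_row)
    return mask
```

For example, `colour_key_alpha([[(255, 255, 255), (0, 0, 0)]], key=(255, 255, 255))` gives `[[0, 255]]`. The weak spots are the ones described above: any part of the character that is close to the background colour gets keyed out too, and soft or anti-aliased edges (hair especially) end up half-transparent with a fringe of the old background, which is why such masks usually need manual clean-up.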

I made this simple video of how I make a composite of just one character and a background. Obviously this generalises to multiple layers. Personally, I think it’s essential to be able to do this graphically for game production.
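Once a proper alpha mask exists, the compositing step itself is simple arithmetic. A minimal sketch, assuming straight (non-premultiplied) alpha stored as 0-255 values, an opaque background, and images held as nested lists of RGB tuples; the function names are hypothetical. Each layer is blended with the standard “over” operator, and stacking layers back to front generalises it to several layers.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]
Image = List[List[RGB]]
Mask = List[List[int]]


def composite_over(background: Image, foreground: Image, alpha: Mask) -> Image:
    """Place `foreground` over an opaque `background` using a 0-255 alpha mask.

    Straight-alpha "over" blend: out = fg * a + bg * (1 - a), a = alpha / 255.
    All three inputs are expected to have the same dimensions.
    """
    result = []
    for bg_row, fg_row, a_row in zip(background, foreground, alpha):
        out_row = []
        for bg, fg, a8 in zip(bg_row, fg_row, a_row):
            a = a8 / 255.0
            out_row.append(tuple(round(f * a + b * (1 - a)) for f, b in zip(fg, bg)))
        result.append(out_row)
    return result


def composite_layers(background: Image,
                     layers: Sequence[Tuple[Image, Mask]]) -> Image:
    """Stack (foreground, alpha) layers back to front, e.g. scenery then character."""
    image = background
    for foreground, alpha in layers:
        image = composite_over(image, foreground, alpha)
    return image
```

In practice an image editor or game engine does this for you; the sketch only shows the per-pixel arithmetic behind it.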

Hopefully this explains what I’m talking about:

For this sort of thing, if you’re serious about it, you want to make a LORA for the character. That’s what I had been planning on for the documentation/feelie art for my WIP before AI tools in general became so radioactive.

Basically you draw a slightly more detailed model sheet than you would in general, train a model on it, and then use that model for generation. Workflow is something like:

- Make a model sheet

- Train a LORA on the model sheet

- Sketch out the scene/pose/whatever you want

- Use `img2img` or the equivalent to produce an intermediate

- Hand edit the intermediate for the final image
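For readers unfamiliar with the term: LoRA (low-rank adaptation) leaves the base model’s weight matrices frozen and trains two small matrices per adapted layer, so the effective weights become W + (alpha / r) · B A, where B is d × r, A is r × k, and the rank r is small. The sketch below shows only that update rule and the parameter savings on a toy matrix; it is not a training script, and real tools apply it inside the model’s layers (typically the attention layers) rather than to a standalone matrix. All function names here are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an m x n and an n x p matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_lora(w: Matrix, b: Matrix, a: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * B @ A, with B of shape d x r and A of shape r x k."""
    r = len(a)  # rank of the adapter = number of rows in A
    delta = matmul(b, a)
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


def param_counts(d: int, k: int, r: int) -> Tuple[int, int]:
    """Trainable numbers for a full d x k update vs. a rank-r adapter."""
    return d * k, r * (d + k)
```

For example, `param_counts(768, 768, 8)` returns `(589824, 12288)`: a rank-8 adapter on a 768 × 768 layer trains about 2% as many numbers as the full matrix, which is why character and style adapters stay small and comparatively cheap to train. Because each adapter is just an added delta, a character LORA and a style LORA can in principle both be applied to the same base model.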

For my WIP I was also going for a sort of medieval woodcut look for most of the art, so I separately created a LORA trained on public domain images of historical woodcuts as a sort of style filter to apply to the touched-up image.

Creating the character and style LORAs requires more time up front, but saves a lot of fiddly work on individual images. For example, applying a woodcut effect to an image is something you can do in GIMP or Photoshop with just “normal” filters, layers, and so on…and that’s how I’m doing it now…but that might take half an hour or so per image, versus a couple of seconds for img2img, and you’d be hard-pressed to tell the two apart.

This of course requires more hands-on stuff (as opposed to just prompt bashing via txt2img or the equivalent) but it’s very good at producing what you actually want instead of whatever a random roll of the generative dice gets you.
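As a rough illustration of the “normal filters” route for a woodcut look, here is a minimal sketch of one ingredient only: a hard two-tone threshold on luma, which gives stark ink-on-paper contrast. It assumes images as nested lists of RGB tuples; the ink and paper colours and the threshold of 128 are arbitrary, hypothetical defaults. A convincing woodcut effect needs much more than this (line texture, hatching, clean-up).

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def luma(pixel: RGB) -> float:
    """Perceived brightness using the ITU-R BT.601 weights."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def two_tone(image: List[List[RGB]], threshold: float = 128.0,
             ink: RGB = (20, 15, 10),
             paper: RGB = (240, 230, 210)) -> List[List[RGB]]:
    """Map every pixel to the hypothetical 'ink' or 'paper' colour by its luma."""
    return [[ink if luma(p) < threshold else paper for p in row] for row in image]
```

Raising or lowering `threshold` shifts how much of the image turns to ink, much like dragging a threshold slider in an image editor.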