Yeah, sorry, I didn’t think: I should have quoted into a separate thread than the “positive/neutral thing today” one, since I was going more into “AI discussion” territory.

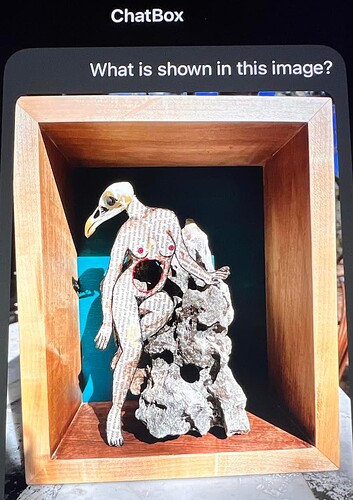

@alyshkalia I don’t know much about the algorithmic details of the image classification and creation stuff except that, again, it’s going to be some sort of process where they train a pattern-recognition algorithm on lots and lots of data (presumably human-labelled images). And that algorithm is going to figure out its own categories and weights and you can maybe sort of feel out the shapes of them after the fact but they’re not necessarily ones that a human would come up with. So they’re susceptible to the same kinds of confusions and “context, not meaning” issues.

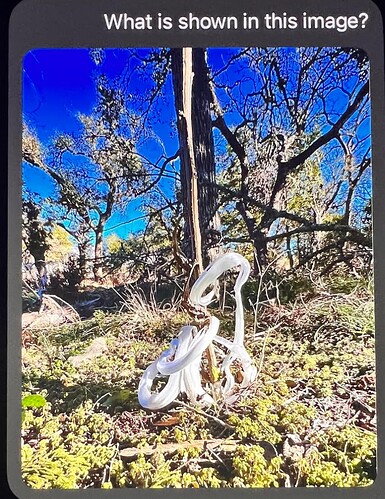

One example from a few years ago that gets thrown around a lot is someone took a photo of a green field with white sheep on it, which was recognized correctly, but if you paint the sheep orange, then it gets recognized as flowers instead. Or… with some of them they recognize text pretty well, and they don’t necessarily think about contrast and color differences the way people do, so you can put almost-completely-transparent text on an image, so a human wouldn’t notice it, but the algorithm will take it as a label and tell you it’s a picture of an apple when it’s actually a piano or whatever… so I wonder if the connection is “people in art gallery photos online are usually dressed up” or something?

So yeah. One problem with any of these sorts of techniques is it’s not like writing a program where you can (theoretically) debug it and change the bit that’s not working: you train it on a big pile of examples and it converges on the patterns for itself, and you just have a giant array of numbers (what are we up to now; I think the small ones that you can run on your computer are like 7 million parameters?) and you have no real idea of what those numbers mean. So it’s hard to control. Not good for when you need reliability.

And there are all kinds of weird corner cases that are turning up, like people recently found with the text ones that if you give it a prompt that’s just one word over and over (or if you ask it to repeat a single word endlessly? Can’t remember, didn’t pay that much attention) then it’ll often start just dumping text from the training data, including like names and addresses and stuff. And that was a big deal because one of the arguments for training AIs not being copyright infringement is that the data isn’t reproduced in the model, the model just kind of abstractly learns from it.

Anyway. If you can live with the companies not paying for the training data, and icky labor practices having Kenyans or whatever hand-tag and look at all the bad stuff to try and filter it out for terrible pay, and the ludicrous electricity usage… they do some impressive things. With the venture capitalists footing the bill, it’s easy to see why people get really into this stuff. I know a bunch of programmers who use it to get them in the ballpark of what they need to know for a new library: they know it’s probably going to be wrong in some ways, but it’ll usually get close enough that they know what the functions are called, and what the code more-or-less looks like, and then they know what to search in the real documentation, so it’s faster to start by having ChatGPT summarize the specific thing they’re looking for than to wade through a bunch of long-winded tutorials that are more likely to be fully correct.

Dunno. I follow some people who do research on these things (on both sides of the issue) because the math and algorithms are fascinating, but generally don’t touch it myself if I can help it, so who knows if I have even the broad strokes roughly correct here.