And the conversation is about today: what we can do now to manage this influx of low-quality AI slop. Sure, AI slop may become less sloppy in the future, but we are not in the future; we are in the present. And presently, LLMs are not producing anything meaningful.

This exposes exactly why it’s different: these games are not popular.

That may be the topic you’re interested in talking about, but IMO it’s rather orthogonal to the question of whether IF community members should be allowed to use generative AI in work they submit to the IF community’s yearly competition.

As far as I’m aware, no tech billionaires or Microsoft employees are submitting games to or voting in the IFComp.

As far as I’m aware, nobody is asking to ban use of Macbooks by IF authors due to Apple’s myriad sins.

It feels like you are deliberately trying to misunderstand. I'm not a native English speaker, but I understand the meaning perfectly.

Yes, fair point. I can’t deny that if generative AI is banned in the IFComp moving forward, from what I’ve seen so far, not much of value will be lost.

I don’t think I misunderstand you (or at least, not on purpose).

You are saying that generative AI should be banned in the competition because Microsoft (among others) are currently making a massive marketing push to promote their AI tools, with potentially significant negative societal consequences, right?

Let me try a different tack: suppose we grant your hypothetical that Microsoft had put a dedicated Twine button in the Windows 7 task bar. Would that be a reason to ban Twine from the competition?

Anyway, I didn’t mean to wade into a huge argument here… I appreciate Kastel, Bruno, and others sharing their thoughtful perspectives. While I’m (still) not entirely comfortable with the stated rationales, I also don’t strongly object to an AI ban moving forward (which seems quite likely, based on the tenor of this thread)!

But Microsoft wouldn’t do that. That’s the point.

Tangentially, here’s maybe a slightly different perspective on the issue that I’ve been percolating. Apologies if it’s obvious or duplicative.

Tech CEOs like to claim that AI gives developers a 10x productivity boost. But many developers on the ground have found that while AI can generate copious (I dare say infinite) amounts of code, it all still needs to be reviewed by humans at a significant time cost.

The same CEOs like to point out how AI lets people communicate more effectively when used as a writing tool. And yet, today I saw the first study examining the organizational costs of employees having to spend time reading an infinite amount of blandly homogeneous but secretly incorrect writing. (That was the thing that finally prompted me to offer an opinion.)

Someone further up the thread mentioned other gaming communities banning AI, and that is no doubt due at least partly to the flood of AI-generated content being aimed at forums, open-source bug-report reviewers, etc., which places additional strain on already limited volunteer resources.

Traditionally, any art worth viewing (or watching or listening to or playing) has required years of investment on the part of the artist(s), while a viewer might spend no more than seconds or minutes or hours with it. (I read a lot of comics and sometimes I feel guilty about how quickly I read them, knowing how much time an artist spent drawing every panel.)

With generative AI, the "creator" may spend less time making a thing than the audience is expected to spend consuming it, which completely inverts the value proposition between creator and viewer that has existed for all of human history.

It seems to me that one of the sociological implications of gen AI is a lot like one of the chief traits of social media, where we eventually discovered that services being "free" meant that we were the product. Because, attention economy. Just like social media, AI-generated content demands an undue amount of our time and attention and delivers very little in return.

That’s your opinion today, about today’s tools.

That's not the argument, no – the argument for banning it is… well, a lot of other things discussed upthread. Pointing out that 'AI' is a corporate product and a broad societal issue is just saying that it's a very different situation from past intra-IF-community drama about tools like Twine, and analogizing the two isn't really productive.

A major reason here is that we are seeing the effect of ‘AI’ across all these disparate fields, and we don’t like what we see!

I highly doubt anyone is going to look back in a decade and see the current crop of AI games as underappreciated gems.

I think this is the article mentioned: AI-Generated "Workslop" Is Destroying Productivity.

Updated: Greatest irony of the AI age: Humans being increasingly hired to clean AI slop

Yup, that’s the study. Everything I cited can be backed up with numerous articles and I only didn’t share any because once I started it would’ve been just a list of links.

As are snippets blindly pasted from Stack Overflow, or from forum posters, or from anywhere else. If the concern were only quality (I know it isn't), then I think AI-generated or AI-assisted code, when used by someone who otherwise knows what they are doing, is no worse than most other sources of code help.

There are a couple of important differences from copy-pasting Stack Overflow code:

- You know where it came from.

- Other human beings have likely looked at it and not found major fault with it, or else they would have commented on it.

That’s the real problem with anything that comes out of an LLM: you have no idea of provenance and no way to estimate correctness.

Yeah, the idea behind Stack Overflow is that other expert humans are looking at it and evaluating how good it is. Plus, LLMs are very good at mimicking the traits we associate with expertise, so it’s hard to tell at a glance if anything they produce is usable or not; with humans, it tends to be fairly clear whether someone knows anything about the topic.

I’d like to amend this with the common-sense extension that algorithms written and freely given away by humans are fine to use. Posterization/color-reduction, rotation, and compositing/translucent-text algorithms used in the creation of cover art are obviously not being written by hand by the author, but they WERE hand-written by someone. I think it’s a real shame that AI has poisoned the “procgen” aesthetic well that you see in something like, say, A Mind is Born.

Yeah, that’s where I think the creativity aspect comes into it. When I open GIMP and tell it to rotate some text, I’m not expecting the program to do anything creative, I’m expecting it to rotate the text exactly the same way every time.

I can’t count how many times someone claimed a solution on Stack Overflow was correct and I tried it and it completely failed. Or, there were good solutions buried in 50 replies.

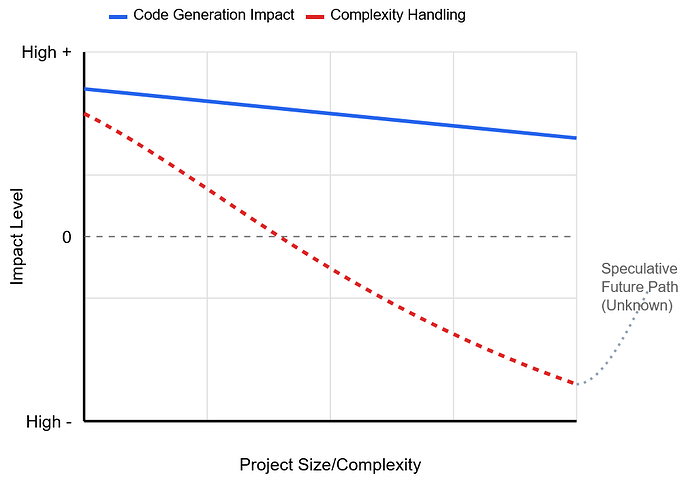

I created a diagram about complexity in GenAI back in February. I think we've hit the upswing, but we're still near the bottom in terms of impact. For anything small, GenAI will crush your request in any programming language you target.

The biggest impact is when you adhere to test-driven development (TDD). Then things get interesting.

I have to admit that I really don’t understand what your graph shows…

But I don't doubt that "AI" is/will be very good at writing code, playing chess, solving math problems and so on. On the other hand, I'm more doubtful that it is/will be as good at creating art.