I think it’s important to ask whether AI would be useful even if it were ethical. “Unethical” and “low quality” aren’t always the same thing, and confusing the two can get messy (like when people find out an actor did something bad and say, “Well, I never liked his movies,” implying bad actors are always bad people).

In this case, I think generative AI use for games is both. The prose given here is definitely serviceable and is probably a 3/5 writing-wise.

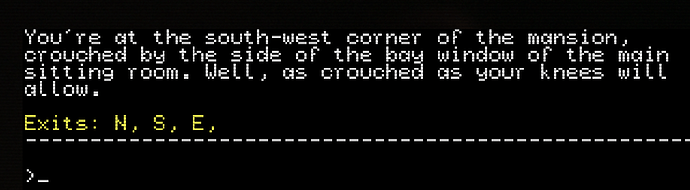

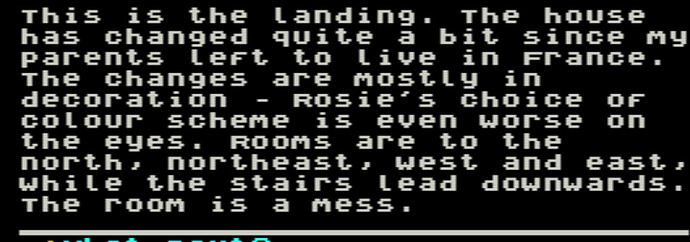

But building a game on this prose would be rough. There are a lot of extraneous details here, like scurrying sounds and shadows. These invite players to “listen to scurrying,” “search shadows,” and so on. If the author implements every reasonable action, that takes a ton of code. But since AI wrote this, those details serve no purpose beyond window dressing. So the player spends a lot of actions trying to interact with the world, guessing at what is important for advancing the story and the game, and failing.

So the author has put in a ton of work, so has the player, and no one is happy.

Or the author doesn’t implement everything, and the game feels buggy.

If you look at popular parser games, they tend to be written in a way that eliminates extraneous scenery objects. The Wizard Sniffer is very bare-bones. Ryan Veeder’s games are similar, as you can see if you read his design philosophy.

Other people make lush games where everything ties in. Maybe there’s a vampire in the attic, so we see bat-themed things downstairs, a coffin in the basement, and so on.

Like most AI-generated text, what’s here looks great but doesn’t hold up to inspection. It might actually be human-written as a test for us, but if so, I still don’t think it’s useful. The difference is that a human can learn and grow. This text kind of reminds me of Horrors of Rylvania.

One final point: all of the descriptions are about the same length. It’s nice to vary them, the way a bio author varies paragraph length.